I talked about Ollama before as a way to run a Large Language Model (LLM) locally. This opens the door to trying out multiple models at a lower cost (although also with lower performance), and it could be interesting if you are not allowed to share any data with an AI provider. For example, you are a developer, but your employer doesn’t allow you to use AI tools for that reason.

If this is a use case that is relevant for you, then I have some good news. With the latest version of JetBrains AI Assistant (available in JetBrains Rider but also in other IDEs) you can now use a local language model through Ollama.

Let me show you how to use this:

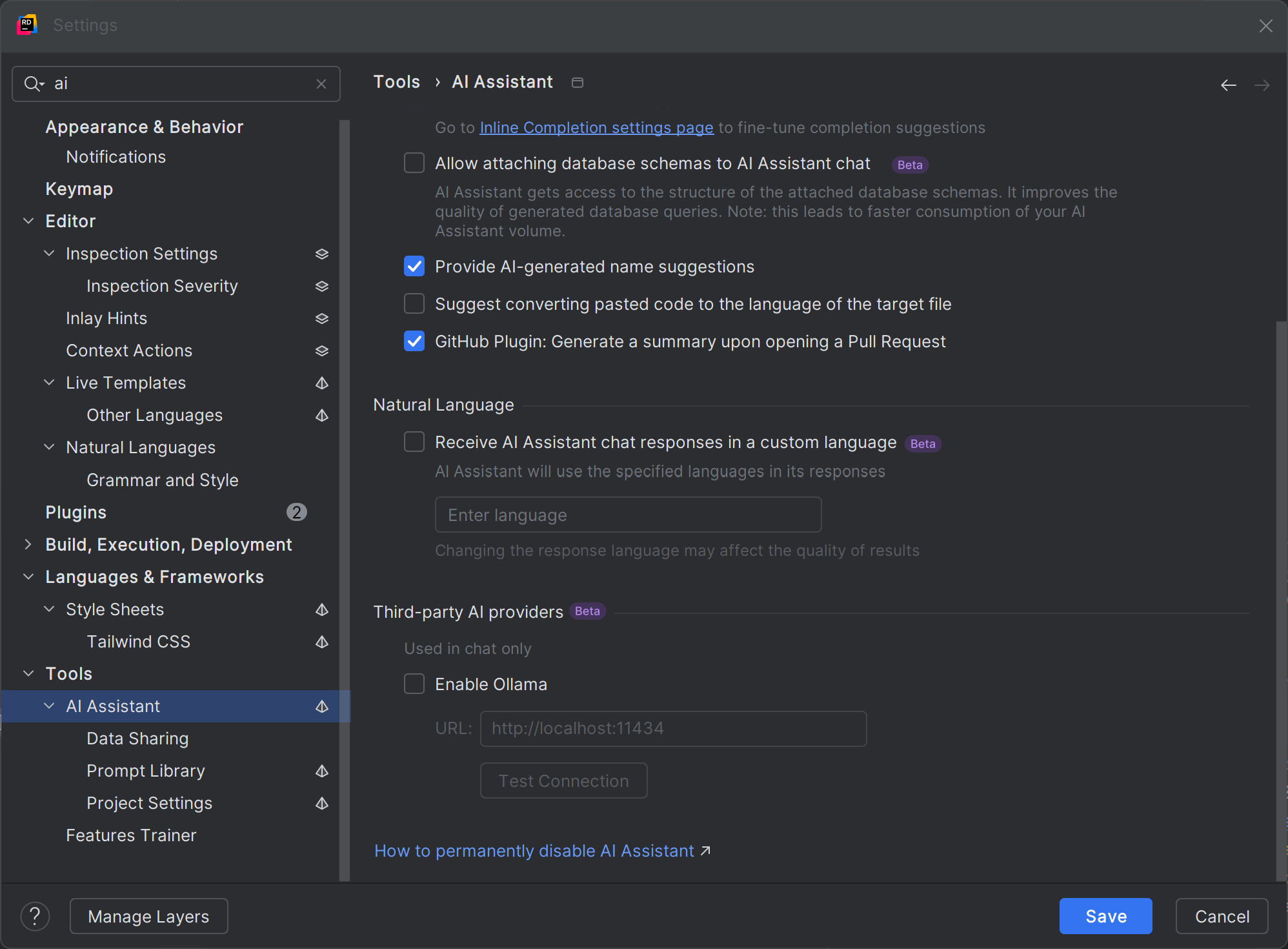

- Open JetBrains Rider (or any other IDE that integrates the JetBrains AI Assistant)

- Hit Ctrl-Alt-S or go to the Settings through the Settings icon at the top right

- Go to the AI Assistant section under Tools

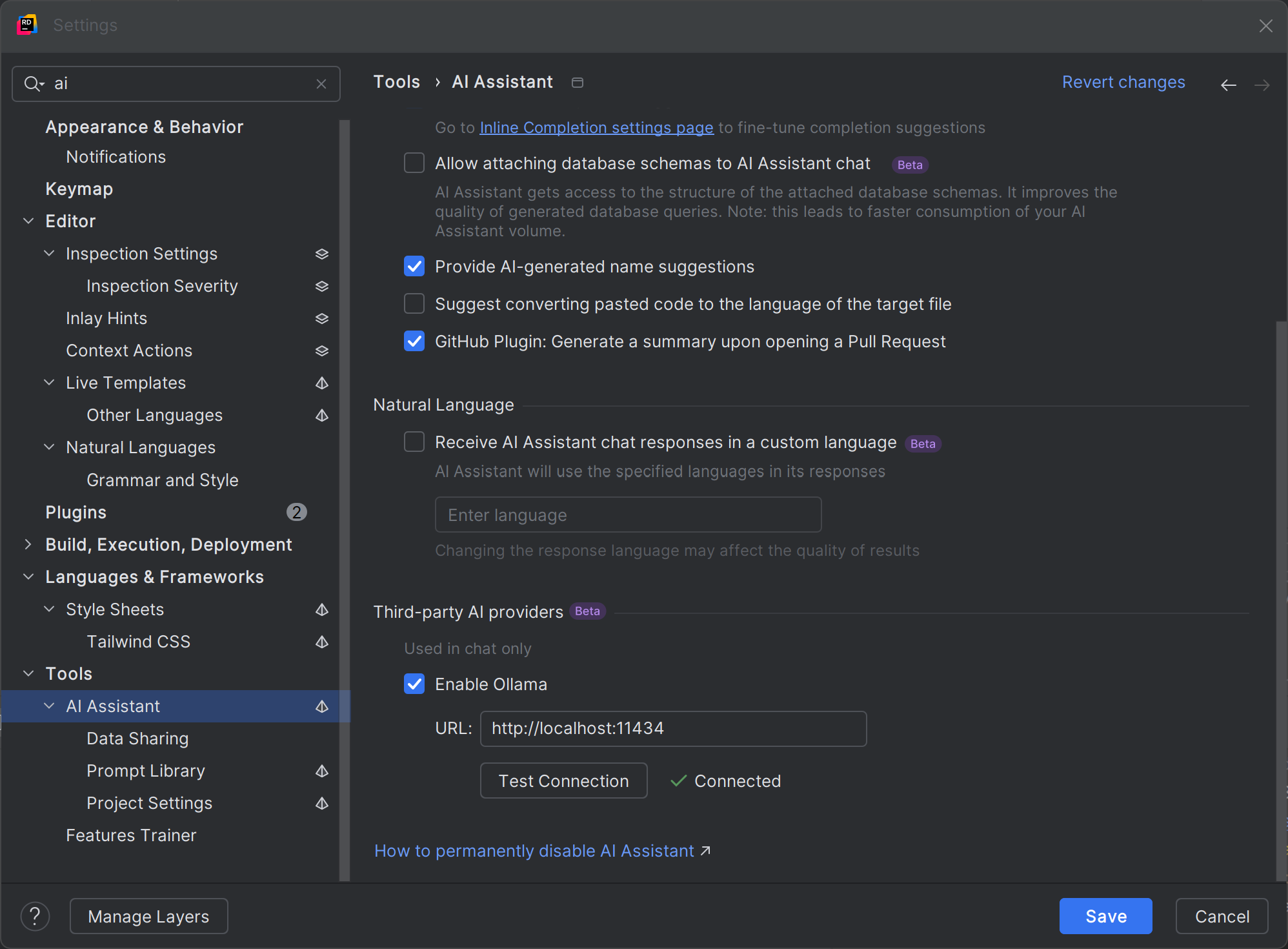

- Check the Enable Ollama checkbox.

- A warning message appears about Data Sharing with Third-Party AI Service Providers.

- Click OK to continue

- Hit Test Connection

Now we can use a local language model in the chat window.
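If Test Connection fails, it can help to check outside the IDE whether Ollama is answering at all. Here is a minimal sketch, assuming Ollama listens on its default address `http://localhost:11434`. The function name `ollama_is_reachable` is my own and not part of any JetBrains or Ollama tooling.

```python
import http.client
import urllib.request

# Assumption: Ollama runs on its default address; change this if yours differs.
DEFAULT_OLLAMA_URL = "http://localhost:11434"


def ollama_is_reachable(base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # Refused connections, timeouts and HTTP errors all end up here.
        return False


if __name__ == "__main__":
    if ollama_is_reachable():
        print("Ollama answered on", DEFAULT_OLLAMA_URL)
    else:
        print("No answer on", DEFAULT_OLLAMA_URL, "- is Ollama running?")
```

A `False` result only tells you that nothing answered with a 200 at that address. It doesn't say anything about the IDE settings themselves.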

Another way to achieve this is directly through the chat window:

- Click on the current language model in the AI Assistant window

- Click on Connect… in the Ollama section

- After the connection is made, you can select any of the locally installed models
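To see which locally installed models you can expect in that list, you can ask Ollama directly through its `/api/tags` endpoint. The sketch below assumes the default address `http://localhost:11434`. The helper names `parse_model_names` and `list_local_models` are hypothetical, and I can't promise the IDE shows exactly the same list.

```python
import json
import urllib.request

# Assumption: Ollama runs on its default address; change this if yours differs.
DEFAULT_OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> list[str]:
    """Extract the sorted model names from an /api/tags response body."""
    return sorted(model["name"] for model in payload.get("models", []))


def list_local_models(base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask a running Ollama instance which models are installed locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        return parse_model_names(json.load(response))


if __name__ == "__main__":
    try:
        for name in list_local_models():
            print(name)
    except OSError as error:
        print("Could not reach Ollama:", error)
```

If the list comes back empty, there is nothing to select in the IDE yet, and you first need to pull a model with `ollama pull`.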

Remark: At the time of writing, the local language model can only be used in the chat window and not in other places.

A nice feature of the JetBrains AI Assistant is that it offers a prompt library that you can tweak for specific purposes. For example, here is the prompt to write documentation for a C# code file:

Remark: The first time I tried to use the JetBrains AI Assistant, I got stuck on an activation. I was able to solve it by logging out through Manage Licenses and logging in again. (More info here)

More information

Running large language models locally using Ollama